Perfect Your SEM Testing: How & Why To Evaluate Everything: SMX East 2016

Looking for inspiration when it comes to designing and running tests in your PPC accounts? Columnist Aaron Levy recaps a session on this topic from SMX East 2016.

A wiser man (or woman) than I coined the quintessential acronym of paid search. Where salespeople swear by the ABCs (Always Be Closing), savvy paid search managers live and die by ABT: Always Be Testing.

In a session presented recently at SMX East, three of the brightest minds in the industry shared their testing strategies, successes and failures. “Perfect Your SEM Testing: How & Why To Evaluate Everything” featured insights from Ted Ives, owner of Ted Ives Consulting; Jake Pinocchio, a digital marketing associate at Red Ventures; and Susan Waldes, president of Fivemill.

Perfect Your SEM Testing — Ted Ives

Ted Ives kicked off the panel with a focus on data collection. He asserted that the first thing an advertiser or agency should do is step back and assess what data they need to measure. He stressed that his ultimate goal in testing is to maximize profit for his clients.

Ives’ testing philosophy runs contrary to the Always Be Testing mantra I expressed earlier. It’s important to remember that a 50/50 test run on 100 percent of your traffic sends half of it to whichever variation turns out to underperform. Instead of testing everything, Ives recommends weighing the potential negative impact and the testers’ workload before structuring a test.

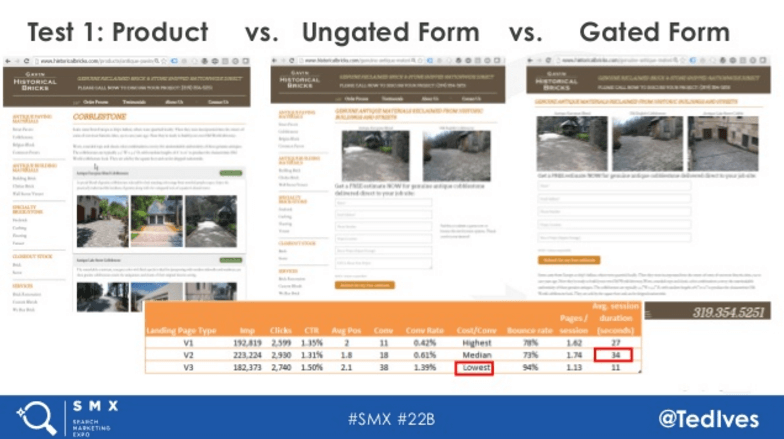

The most prominent test Ives explored centered on landing pages. He assessed the value of a product page relative to dedicated landing pages. He found that the strongest surface-level performer (the gated form) led to less engaged customers and less revenue. He suspected that walling content off behind a form created friction, forcing customers to convert before they were ready.

He believed that many potential customers were actually converting via phone calls. Echoing his measurement-first theme, he implemented a call-tracking suite to measure that impact.

To conclude, Ives reminded us that measuring success often has to go deeper than surface metrics and depends on statistically significant data. While there are myriad statistical significance calculators, they aren’t always correct. His favorite tool for evaluating significance is the one built by Cardinal Path.
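Ives didn’t explain how the Cardinal Path calculator works internally, so the sketch below is not that tool. It’s a minimal illustration, under stated assumptions, of one common way significance calculators judge an A/B result: a two-sided, two-proportion z-test on conversion rates. The function name and the click and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, clicks_a: int, conv_b: int, clicks_b: int):
    """Two-sided two-proportion z-test on conversion rates (a sketch, not Cardinal Path's method).

    Returns (z, p_value). The standard error uses the pooled conversion rate,
    i.e. it assumes both variations share one rate under the null hypothesis.
    """
    rate_a = conv_a / clicks_a
    rate_b = conv_b / clicks_b
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    z = (rate_b - rate_a) / se
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided normal tail probability.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: control (A) vs. variation (B), 5,000 clicks each.
z, p = two_proportion_z_test(conv_a=200, clicks_a=5000, conv_b=250, clicks_b=5000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these made-up counts (a 4 percent versus 5 percent conversion rate), z comes out around 2.41 and p around 0.016, below the conventional 0.05 threshold. The normal approximation behind this test gets shaky at low conversion volumes, which is one reason different calculators can disagree on the same data.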

How To Be A Full Funnel Marketer — Jake Pinocchio

Jake Pinocchio was next up. He runs tests at Red Ventures, a truly full-service firm that handles leads through every stage of the sales funnel. That gives Pinocchio the opportunity to test every stage of the marketing funnel to maximize the value of each lead. He walked the audience through four tests, each exploring a different part of the conversion path.

His first example tested two product tiers for a credit card company. Product A was easy to get approved for and yielded a higher number of total users, but at a lower lifetime value. Product B was a premier product that drew lower volume but a better long-term customer. Pinocchio found that although the premier product converted fewer customers, its value per conversion was much higher.
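Pinocchio’s comparison comes down to arithmetic: a tier’s total value is its conversions multiplied by its value per conversion, less what it cost to acquire them. The sketch below uses made-up numbers, not figures from the session, and a hypothetical `ProductTier` class to show how a lower-volume product can still come out ahead.

```python
from dataclasses import dataclass


@dataclass
class ProductTier:
    name: str
    conversions: int             # approved customers in the test period (hypothetical)
    value_per_conversion: float  # estimated lifetime value per customer, in dollars (hypothetical)
    ad_cost: float               # media spend attributed to this tier, in dollars (hypothetical)

    @property
    def total_value(self) -> float:
        # Volume multiplied by value per conversion.
        return self.conversions * self.value_per_conversion

    @property
    def net_profit(self) -> float:
        # Total value minus acquisition cost.
        return self.total_value - self.ad_cost


# Illustrative inputs only; equal spend on both tiers is an assumption.
tier_a = ProductTier("Product A (easy approval)", conversions=400, value_per_conversion=50.0, ad_cost=12000.0)
tier_b = ProductTier("Product B (premier)", conversions=150, value_per_conversion=200.0, ad_cost=12000.0)

for tier in (tier_a, tier_b):
    print(f"{tier.name}: value ${tier.total_value:,.0f}, net ${tier.net_profit:,.0f}")
```

With these assumed inputs, Product A produces $20,000 in value and $8,000 net, while Product B produces $30,000 and $18,000 net despite converting 250 fewer customers. In a real account the premier product’s keywords may well cost more, so spend should be measured per tier rather than assumed equal.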

Pinocchio’s second test compared whether a lead form or a call center would better convert potential cable customers. He hypothesized that driving customers to an interactive voice response (IVR) system would be more efficient than forcing them through forms. By using click-to-call instead of a traditional campaign, he reshaped the conversion path and shifted the share of conversions toward the IVR system. While the total number of conversions remained flat, the proportion of calls increased by over 50 percent. The end result was a 20X increase in net profit.

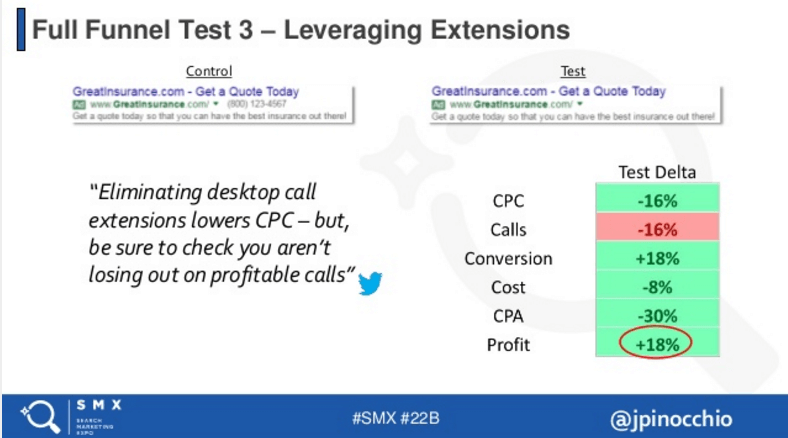

His third test supported a theory I’ve often wanted to explore. Pinocchio proposed an “extension tax,” whereby you wind up paying more for having larger extensions. His hypothesis was that a call extension on desktop ads led to fewer ad clicks, lowering CTR and dragging down Quality Score.

He removed call extensions from his campaigns, expecting to make up the lost volume through form conversions and lower CPCs. The results supported his hypothesis: eliminating call extensions led to a 16 percent decrease in CPCs and a lift in form conversions. While this particular test yielded a lift in profit, Pinocchio cautioned the audience to dig deep and make sure the front-end metrics are supported by back-end results.

His final test explored an age-old question: “Does enhanced cost-per-click (ECPC) bidding work?” Pinocchio and his team found that ECPC worked as promised, yielding more conversions on a similar budget. Net profit increased in this particular case, but Pinocchio cautioned that ECPC can backfire: Google doesn’t see total profit metrics, so back-end revenue can decline even as conversions rise.

AdWords Drafts & Experiments (Answering the Age Old SEM Mysteries) — Susan Waldes

Closing the panel was Susan Waldes of Fivemill. Before she got rolling, Waldes stressed the importance of understanding what really counts as a test versus what’s just doing your job.

The most common and valuable form of testing, according to Waldes, is good old-fashioned A/B testing. To make A/B testing easier, Google has introduced a new tool called Campaign Drafts and Experiments (CDE), which replaces the now-defunct AdWords Campaign Experiments.

The major benefit of using CDE for landing page and ad copy tests is that you control the traffic split. Echoing Ted Ives’ point from earlier, you don’t have to devote half of your budget to a test. Waldes recounted wanting to find out how wildly traffic would swing if she doubled her bids. You’d never run such a test on all or even half of your traffic, but with CDE she was able to test on a small scale and adjust.

In addition to campaign testing, Waldes explored the Drafts feature of CDE. Drafts fills a real need, letting you forecast scenarios and create new, filterable datasets. It also makes it convenient to share information outside of AdWords Editor, a vast improvement over the old AES files.

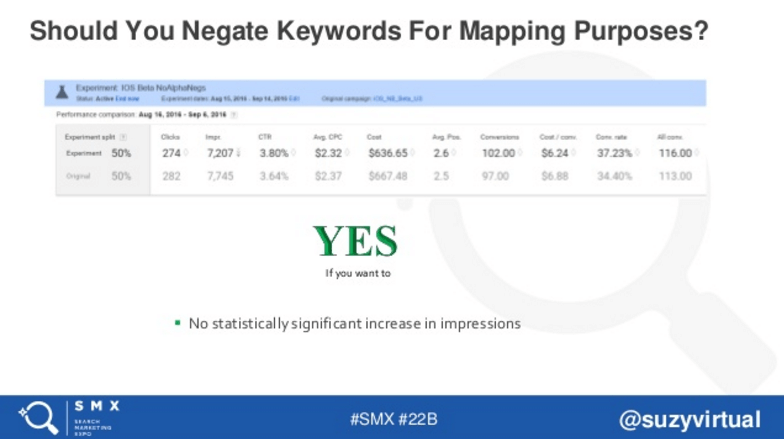

Using CDE, Waldes was able to answer a few of paid search’s age-old questions. If you’re running the same keywords in several campaigns, Waldes says, you can use negatives to direct traffic without a noticeable loss in impressions. In testing manual CPC bidding against AdWords CPA bidding, Waldes found that manual bidding was more efficient and yielded better leads.

The resounding theme of the session was that, as marketers, we need to dig deeper than surface metrics. It’s our responsibility to identify the tangible, back-end impact of each test.

Opinions expressed in this article are those of the guest author and not necessarily Search Engine Land.